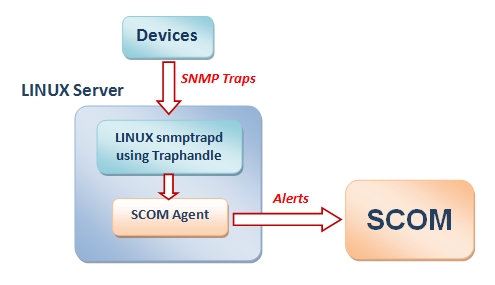

It is possible to configure SCOM to act as a syslog server. You instruct all the Linux machines to send their events to this server, and then define SCOM rules that generate alerts from those syslog events; see this link.

With this architecture, the Linux machines send syslog events to SCOM as SNMP traps, and these events are then filtered to generate the alerts. Because the number of those traps can be huge, this sometimes causes performance issues, on top of the added complexity.

If you already have SCOM monitoring Linux, you can use it to monitor syslog as well, and there are probably several ways of doing it. I'm describing mine, but I'm open to suggestions, advice and criticism.

STEPS:

1- Configuring syslog

First I configure syslog on each Linux machine to also write messages to three new files, according to their importance: critical, warning or information. These files will later be processed by SCOM.

1.1- Creating the log directory

As the monuser user, I create the log directory in monuser's home, which is normally "/home/monuser":

cd /home/monuser

mkdir log

1.2- Setting permissions for the log directory

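As superuser, from /home/monuser (a directory needs the execute bit so that monuser can enter it and read the files inside, hence 770 rather than 660):

chgrp root log

chmod 770 log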

1.3- Configuring syslog

As superuser, I add the following lines to /etc/rsyslog.conf (or to /etc/syslog.conf if /etc/rsyslog.conf doesn't exist):

# -- SCOM definitions

#

# Log every msg with priority greater than or equal to ERROR, as SCOM CRITICAL

*.err;mail.none;auth.none;authpriv.none /home/monuser/log/SCOM.Critical

#

# Log every msg with priority WARNING, as SCOM WARNING

*.=warn;mail.none;auth.none;authpriv.none;cron.none /home/monuser/log/SCOM.Warning

#

# Log every msg with priority NOTICE, as SCOM INFORMATION

*.=notice;mail.none;auth.none;authpriv.none;cron.none /home/monuser/log/SCOM.Information

Syslog's info-priority messages are deliberately left out, because they tend to generate a very large number of entries; the NOTICE priority is what ends up in the SCOM information file.

This syslog configuration records events in three files, according to their severity:

/home/monuser/log/SCOM.Critical

/home/monuser/log/SCOM.Warning

/home/monuser/log/SCOM.Information
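To make the selector semantics concrete, here is a minimal sketch of how the three rules above route a message. The function name scom_target is hypothetical and only mirrors the configuration; it is not part of syslog or SCOM. It assumes the standard selector meaning: "*.err" matches err and anything more severe, while "*.=warn" and "*.=notice" match exactly one priority.

```shell
# Hypothetical helper mirroring the three selectors above.
# Prints the file a message would be written to, or nothing if it is not logged.
scom_target() {
    facility="$1"; priority="$2"
    case "$facility" in
        mail|auth|authpriv) return 0 ;;   # excluded from all three files
    esac
    case "$priority" in
        emerg|alert|crit|err)             # *.err: err and anything more severe
            echo "/home/monuser/log/SCOM.Critical" ;;
        warning|warn)                     # *.=warn: exactly warning, cron excluded
            [ "$facility" = cron ] || echo "/home/monuser/log/SCOM.Warning" ;;
        notice)                           # *.=notice: exactly notice, cron excluded
            [ "$facility" = cron ] || echo "/home/monuser/log/SCOM.Information" ;;
    esac
}

scom_target user crit      # goes to SCOM.Critical
scom_target cron warning   # prints nothing: cron is excluded from SCOM.Warning
scom_target user info      # prints nothing: info is not collected
```

Note that cron errors still reach SCOM.Critical, because the first rule does not carry cron.none.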

1.4 - Restarting the syslog service

The exact command depends on the Linux distribution; in my case it is (as superuser):

service rsyslog restart

1.5 - Changing the permissions of the log files

After restarting the syslog service I have the following files in /home/monuser/log:

SCOM.Critical

SCOM.Warning

SCOM.Information

As superuser I execute:

chown monuser /home/monuser/log/SCOM.*

chmod 660 /home/monuser/log/SCOM.*

2- Creating a shell script to manage the logs

This shell script will be later executed via SCOM, to read and process the logs.

The shell script is:

# -- 1st Parameter is the logfile, for instance: /home/monuser/log/SCOM.Critical

flog="$1"

# -- File has data?

if [ ! -f "$flog" ] || [ ! -s "$flog" ]; then

exit 1

fi

# -- Copy the file aside, then truncate the original in place

cp "$flog" "$flog.sent"

> "$flog"

# -- Send the output to SCOM

cat "$flog.sent"

exit 0

I ended up converting the script to a single line, so that it can later be inserted directly into a SCOM rule.

For the critical alert, the line would be:

flog="/home/monuser/log/SCOM.Critical"; if [ ! -f "$flog" ] || [ ! -s "$flog" ]; then exit 1; fi; cp "$flog" "$flog.sent"; > "$flog"; cat "$flog.sent"; exit 0
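Before wiring this into SCOM, the logic can be tried on a scratch file. The sketch below wraps the same steps in a function (the name drain_log is hypothetical) and uses return instead of exit, so that it can run in an interactive shell without closing it. It works on a temporary directory, not on the real logs.

```shell
# Hypothetical function form of the script above, for experimenting safely.
drain_log() {
    flog="$1"
    # Nothing to report if the file is missing or empty
    if [ ! -f "$flog" ] || [ ! -s "$flog" ]; then
        return 1
    fi
    cp "$flog" "$flog.sent"   # keep a copy for follow-up rules
    : > "$flog"               # truncate in place, so syslog keeps writing to the same file
    cat "$flog.sent"          # this output is what SCOM would receive
    return 0
}

tmp=$(mktemp -d)
printf 'first event\nsecond event\n' > "$tmp/SCOM.Critical"

rc=0; out=$(drain_log "$tmp/SCOM.Critical") || rc=$?
echo "first run: rc=$rc"
echo "$out"

rc2=0; drain_log "$tmp/SCOM.Critical" > /dev/null || rc2=$?
echo "second run: rc=$rc2"
```

The second run returns 1 because the first one emptied the file, which is what keeps the rule from alerting twice on the same events.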

3- Defining the SCOM rules

Finally I specify three different rules, for critical, warning and information alerts.
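Since the three rules differ only in the log file they read, the one-line commands can be generated rather than edited by hand. This is a small sketch; scom_oneliner is a hypothetical helper name, and the paths are the ones configured in step 1.

```shell
# Hypothetical helper: print the single-line command for a given log file.
# The format string is single-quoted so that $flog stays literal in the output.
scom_oneliner() {
    printf 'flog="%s"; if [ ! -f "$flog" ] || [ ! -s "$flog" ]; then exit 1; fi; cp "$flog" "$flog.sent"; > "$flog"; cat "$flog.sent"; exit 0\n' "$1"
}

for level in Critical Warning Information; do
    scom_oneliner "/home/monuser/log/SCOM.$level"
done
```

Each printed line can then be pasted into the corresponding rule.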

Creating the first rule, the others are similar:

The script is sent in a single line:

The only criterion is ReturnCode Equals 0:

This puts all of the script's output into the Alert Description:

4- Testing

As superuser, on the Linux machine, enter:

Testing a critical alert:

logger -p user.err "TEST Error Msg"

Testing a warning alert:

logger -p user.warning "TEST warning Msg"

Testing an information alert:

logger -p user.notice "TEST notice Msg"
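To confirm that a test message actually reached its file before the SCOM rule drains it, a small polling helper can be used. This is only a sketch: wait_for_line is a hypothetical name, and the commented usage assumes the paths from step 1.

```shell
# Hypothetical helper: succeed if TEXT shows up in FILE within TRIES attempts,
# checking once per second.
wait_for_line() {
    file="$1"; text="$2"; tries="${3:-5}"
    while [ "$tries" -gt 0 ]; do
        # -F: treat TEXT as a fixed string, -q: no output, only the exit status
        if [ -f "$file" ] && grep -qF -- "$text" "$file"; then
            return 0
        fi
        sleep 1
        tries=$((tries - 1))
    done
    return 1
}

# Usage on a machine configured as above:
# logger -p user.err "TEST Error Msg"
# wait_for_line /home/monuser/log/SCOM.Critical "TEST Error Msg" && echo "critical OK"
```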

5- Further development

What if you want to create an alert that depends on a specific syslog event?

One possibility is to create a rule that searches inside the file left over by the main rule. For instance, the rule "Syslog critical messages" leaves behind a file named 'SCOM.Critical.sent', so further rules can search this file for a certain regular expression.

Example of a script (not tested):

# -- Where to find

flog="/home/monuser/log/SCOM.Critical.sent";

# -- What text to find, for instance, "hardware"

find="hardware"

# -- Define a unique suffix for this rule

name="hw"

# -- File having the found events

flog2="$flog.$name"

# -- File has data?

if [ ! -f "$flog" ] || [ ! -s "$flog" ]; then

exit 1

fi

# -- There's new data to process?

if [ ! -f "$flog2" ] || [ "$flog" -nt "$flog2" ]; then

grep "$find" "$flog" > "$flog2"

if [ -s "$flog2" ]; then

cat "$flog2"

exit 0

fi

fi

exit 1

This script can then be executed from a rule with a shorter time interval than "Syslog critical messages", so that it processes the leftover file before the main rule overwrites it. For instance, if the main rule runs every 15 minutes, then the rules looking for a certain text should run every 12 minutes.
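The filter script can also be tried on scratch files first. The sketch below is a function form of it (filter_sent is a hypothetical name, taking the file, the text and the suffix as arguments), run twice against the same leftover file to show that the second pass stays silent until the main rule writes a newer file.

```shell
# Hypothetical function form of the filter script, for experimenting on scratch files.
filter_sent() {
    flog="$1"; find="$2"; name="$3"
    flog2="$flog.$name"
    # Leftover file missing or empty: nothing to search
    [ -s "$flog" ] || return 1
    # Only search when there is no result file yet, or the leftover file is newer
    if [ ! -f "$flog2" ] || [ "$flog" -nt "$flog2" ]; then
        grep -- "$find" "$flog" > "$flog2"
        if [ -s "$flog2" ]; then
            cat "$flog2"
            return 0
        fi
    fi
    return 1
}

tmp=$(mktemp -d)
printf 'kernel: hardware error on cpu0\nsshd: session opened\n' > "$tmp/SCOM.Critical.sent"

rc1=0; first=$(filter_sent "$tmp/SCOM.Critical.sent" hardware hw) || rc1=$?
rc2=0; second=$(filter_sent "$tmp/SCOM.Critical.sent" hardware hw) || rc2=$?
echo "first run:  rc=$rc1 output=$first"
echo "second run: rc=$rc2"
```

The first run returns 0 with the matching line; the second returns 1 because the result file is not older than the leftover file.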